June 26, 2020

Note: This is the seventh in a series of blogs on using your assessment data to address learning gaps during the 2020–2021 school year.

When talking about assessment, educators often find themselves using medical analogies. It's not uncommon, for example, to hear summative testing described as a "post-mortem," in the sense that summative test results tell us what happened, but only after it's too late to make changes. What would be more beneficial is earlier and more frequent formative assessment, which we might compare to regular "check-ups" with the family doctor.

As we've noted in earlier blogs, formative and interim assessment will be extremely important during the 2020–2021 school year. Because of the learning loss resulting from this spring's school closures, there is heightened interest in monitoring the progress of all students more closely. This is very much like a physician saying, "We need to monitor you more closely due to risk factors." The disruptions that affected schools this spring have surely created "risk factors" for many students. How do we monitor their performance and progress more closely in the new school year?

First, we should note that the topic of this blog is "monitoring progress" in a broad sense, not formal Progress Monitoring. We use capitalization here to refer to the specific process of tracking the performance and growth of students in formal Tier 2 or Tier 3 interventions as part of a Response to Intervention (RTI) or Multi-Tiered System of Supports (MTSS) model. This should not be confused with "progress monitoring" or "monitoring the progress" of all students through formative assessment tools and strategies.

The previous blog in this series also addresses progress monitoring, but it focuses specifically on tracking discrete skills at the mastery level. That level of detail is most relevant to teachers' daily practice, while this blog focuses on monitoring progress at a higher, broader level that is more appropriate for administrators. In the discussion that follows, we explain how, as an administrator, you can best keep your finger on the pulse of what is happening academically next school year, particularly in light of the "risk factors" to performance that so many students have experienced.

Before we begin, we want to stress that you can achieve an "early win" by gathering your baseline data now, as we describe in the third blog in this series. Spending a small amount of time pulling key metrics from 2019–2020 will give you a point of reference and immediate insights once your first fall screening is complete.

Two options for monitoring students’ progress

As we look ahead to the 2020–2021 school year, we'll frame our discussion around two ends of a continuum. You'll need to decide where your district falls on this continuum based on your systems and tools, your resources, and your data culture. Our goal is to present options; you are best positioned to decide which of them makes the most sense for you.

Regardless of the option you choose, we suggest adhering to the typical screening windows of fall, winter, and spring for all students. These screenings are the equivalent of regularly scheduled physical exams. But beyond these regular "check-ups," many students will need closer monitoring of their progress.

Option 1: Monitoring daily reading and math practice

Digital practice programs were a tremendous benefit this spring because they allowed students to continue practicing literacy and math skills online while school buildings were closed. If your district uses digital practice programs that report key metrics about student activity and progress, and if you're actively using formative assessment resources in Star, then you're receiving a continual flow of information. If this information is both regular and robust, it provides a way to monitor student progress between your formal screening windows.

For example, districts that use Accelerated Reader and/or the myON digital reading platform receive a regular flow of information about student reading practice. If students are engaged in independent reading and are performing well on comprehension quizzes, there is little cause for concern about their reading performance. Similarly, districts that use our Freckle ELA and Freckle Math programs receive ongoing insight into students' activity and progress, both in independent practice and on teacher-assigned ELA and math activities.

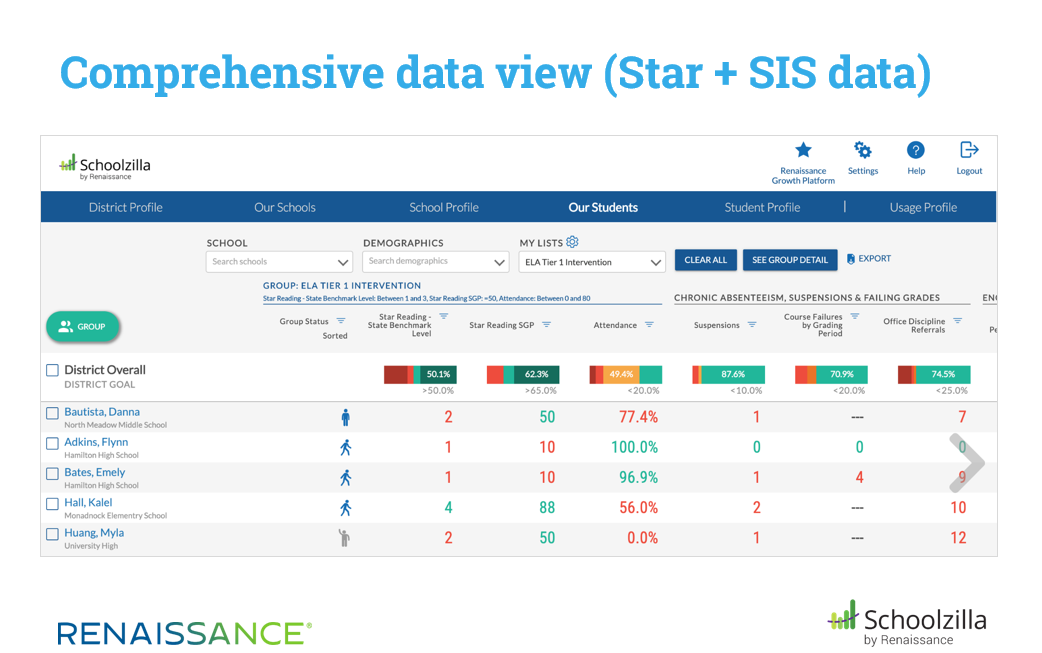

In our previous blog, we explained how the skills checks included in the Star Assessment suite can aid in assessing students at the mastery level. These tools provide a granular level of formative assessment that complements the computer-adaptive tests (Star Early Literacy, Star Reading, and Star Math). Also, with the recent addition of Schoolzilla to the Renaissance family, data from our practice programs can be presented to administrators in intuitive and dynamic ways, as described in more detail below.

The key question in determining whether your district falls on this end of the continuum is this: Do you have a regular, robust, and sufficiently specific flow of data about the daily and ongoing work of your students? If so, the combination of the three standard screening windows and the data flowing from your practice programs is likely all you need to monitor progress.

Option 2: Adding a fourth screening window

If you do not have a regular and reliable flow of data about students' daily practice, you may instead want to rely a bit more on your interim assessment tools. One possible approach, often called "3+1," supplements the three typical screening windows (fall, winter, and spring) with an additional screening in late fall (e.g., early November), between the standard fall and winter screenings.

The rationale behind this approach is that it:

- Expands your ability to make proficiency projections in Star (some reports require a specific number of test administrations to generate projections).

- Provides an additional school-wide opportunity to plot students’ performance against important benchmarks.

- Adds an early check to ensure that students aren't sliding.

- Provides updated instructional planning information.

That said, our cardinal rule for assessing students with Star is this: Do not give the assessment unless you are actively planning to review and act on the results. Before adding a fourth screening, make sure your data team structure has the capacity to review and act on the data an additional screening window will produce. If it does, then "3+1" is an option for you. If not, there's no point in scheduling an additional screening.

Another option is a "+1" window that is limited to certain students or grades. Given common accountability requirements around grade 3 reading proficiency, for example, and given the disproportionately high number of reading Focus Skills covered in grades K–2, you might choose to add the "+1" screening only for students in grades K–3. You might also limit the "+1" screening to students who were below benchmark during fall screening but were not placed in a formal intervention. The additional screening would help ensure that these students' performance is not slipping.

When adding a screening window, it is important not to shift your standard screening windows drastically. The point of "3+1" is to get an additional round of screening in before the middle of the school year. This gives you the data to adjust instruction and address any gaps while there is still time to affect student outcomes.

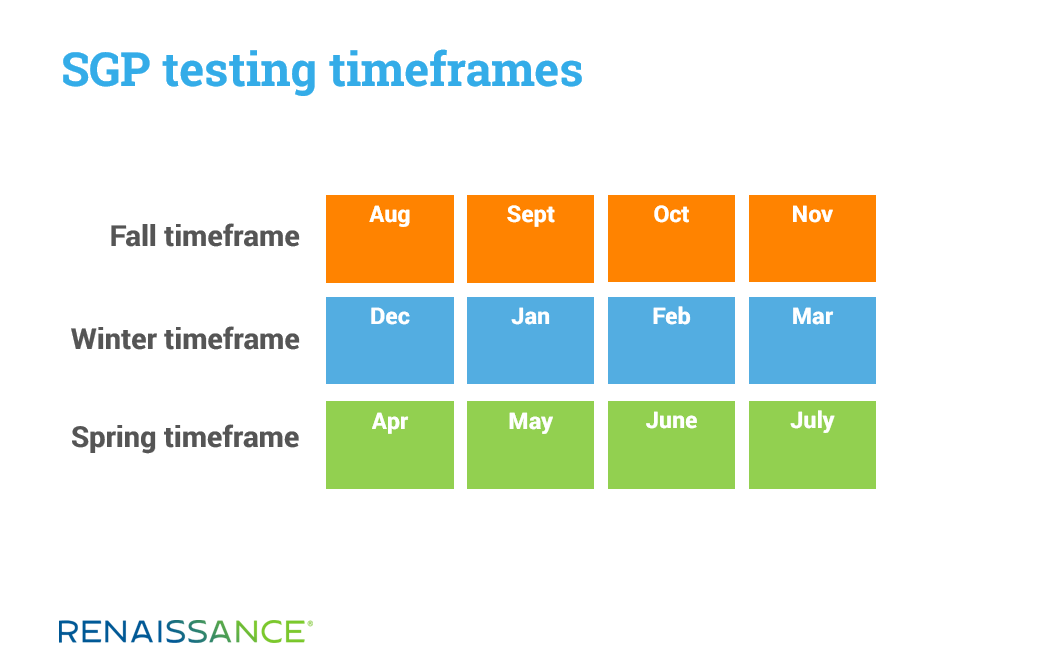

Also, remember that Star's Student Growth Percentile (SGP) score requires that tests be administered within certain windows. Keep these windows in mind when building your assessment calendar so that you can make use of this key growth metric.

Putting it all together

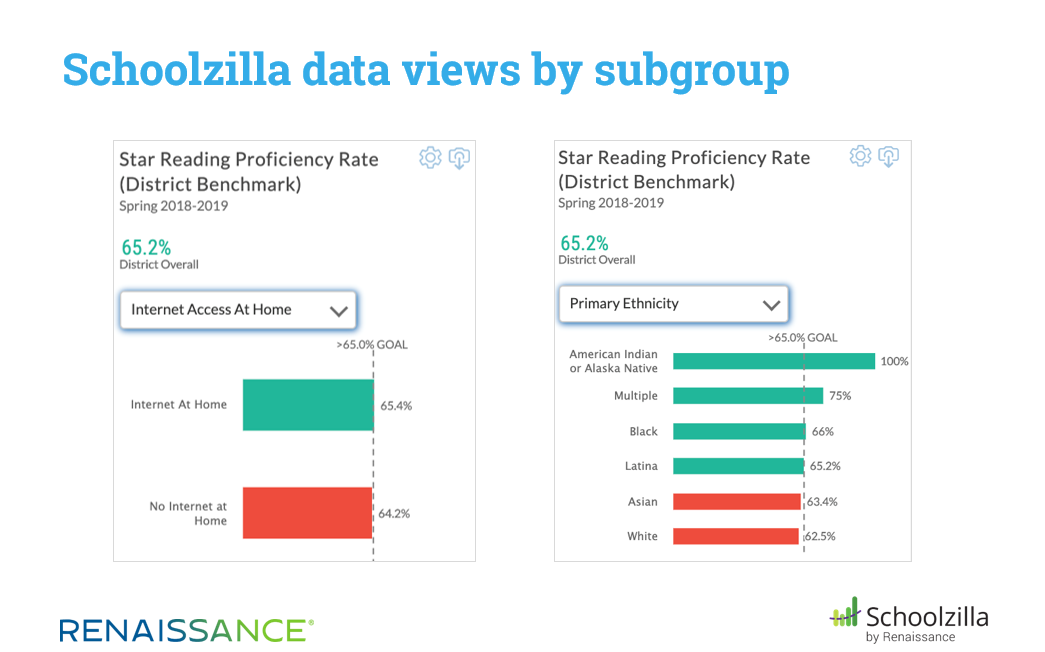

We noted earlier that Schoolzilla is now part of the Renaissance family. We're excited about this addition because Schoolzilla allows you to disaggregate data by multiple demographic variables, to monitor longitudinal trends, and to create specific groupings of students to monitor more closely based on a variety of "risk factors" (e.g., no home internet access, chronic absenteeism, or reading below grade level).

For districts that do not already have platforms for data warehousing and visualization, Schoolzilla offers the ability to merge Renaissance data with the information in your student information system, state test data, and data from a variety of other systems for a comprehensive view of what’s happening across your district and in each of your schools.

We began this discussion with a reference to medical analogies, and we've seen how progress monitoring is like the regular physical exams ("check-ups") that are designed to manage different risk factors. Rick Stiggins (2014) takes this analogy one step further. He contends that the pre-service training teachers typically receive on assessment is "akin to training physicians to practice medicine without teaching them what lab tests to request for their patients, or how to interpret the results of such tests." We hope that this discussion of progress monitoring has shed light on the best "lab tests" for your students and has provided guidance on how to interpret the results.

In our next blog, the last in this series, we'll explore some final questions about the 2020–2021 school year, including the appropriate benchmarks to use. We'll also discuss the potential impact of the different instructional delivery models schools are considering (e.g., all remote, all face-to-face, or a hybrid model), and we'll explain how to disaggregate your data based on these factors.

References

Stiggins, R. (2014). Revolutionize assessment: Empower students, inspire learning. Thousand Oaks, CA: Corwin.
