July 10, 2014

Toward the end of my master’s degree program, I called my parents and excitedly announced that I was entering a doctoral program in psychometrics. A few uncomfortable moments later, my father responded, “That sounds…interesting.” Encouraged, I explained that a big part of my job would be assessing test scores for reliability and validity. More silence, followed by my mother piping up about her latest adventure. I knew I had lost them at “psychometrics,” and you may ask, “So?” Well, I admit that the word psychometrics hardly makes people jump for joy, but it can actually be quite fun! It’s also becoming an area more and more educators are expected to understand as they choose the best assessments for accountability and informing instruction.

Let’s talk reliability and validity as crucial considerations in determining the quality of tests. What first comes to mind when you think of the words reliability and validity in general? You might think of your reliable car, and that valid argument you made while discussing something over dinner with friends. In fact, reliability and validity are just ordinary words to most people. With the important role that assessments continue to play in K-12 education, most educators are now more familiar with the technical underpinnings of these terms, but throw in mathematical equations with squiggly notation and that sense of calm is sucked out of the room. Equations aside, the message around reliability and validity is surprisingly clear.

Reliability and validity, demystified

Take the widely used example of the bathroom scale. If you repeatedly step on the scale, you get the same reading. You could say the weight measurements are consistent—or reliable—because the scale shows the same weight each time you step on it. With educational tests, we say that test scores are reliable when they are consistent from one test administration to the next. By definition, reliability is the consistency of test scores across multiple test forms or multiple testing occasions.
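If you like to see numbers, one common way to quantify this kind of consistency is to correlate scores from two administrations of the same test, often called test-retest reliability. Here is a minimal sketch in Python. The student scores are invented for illustration, and `pearson_r` is just a helper name I chose, not part of any assessment product.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of the same length, at least 2 scores each")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around each mean.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same five students on two testing occasions.
first_administration = [12, 15, 18, 20, 25]
second_administration = [13, 14, 19, 21, 24]

retest_r = pearson_r(first_administration, second_administration)
print(f"test-retest correlation: {retest_r:.2f}")
```

A value close to 1 means students kept roughly the same standing from one occasion to the next, which is what consistent scores look like. This is only one of several ways to estimate reliability.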

Now, suppose this same bathroom scale is off by 5 pounds. Because the scale is reliable, you still get consistent weight measurements every time you weigh yourself, but the measurements are not accurate because they are off by 5 pounds! In this case, although the recorded weights are reliable, they are not valid measures of how much you weigh.

Conversely, if the scale were calibrated just right, you’d get a weight measurement that is both reliable and valid, each time. In the context of educational testing, validity refers to the extent to which a test accurately measures what it is intended to measure for the intended interpretations and uses of the test scores.

How are reliability and validity related?

Simply stated, reliability is concerned with how precisely a test measures the intended trait; validity has to do with accuracy, or how closely you are measuring the targeted trait.

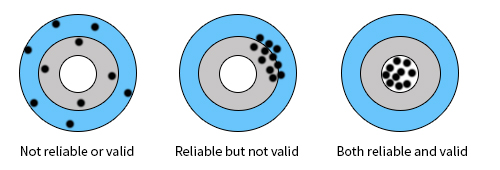

In order to be valid, a score must be reliable. However, just because a score is reliable does not mean it’s valid. The three wheels in the image above help to drive this point home. If you think of the innermost circle as your true weight measurement, you’ll notice that the first wheel has weight recordings that vary wildly each time we step on our bathroom scale; it’s clear the weight measurements are not reliable, and thus not valid. The second wheel shows reliable but not valid weight measurements that might come from that sneaky scale that is off by 5 pounds. Lastly, only the properly calibrated scale will give us both precise and accurate weight measurements, as shown in the last wheel. Because reliability is a necessary requirement for validity, we commonly confirm reliability before collecting validity evidence.
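The three wheels can also be sketched with a few lines of Python. Everything here is an assumption made for illustration: the true weight of 150 pounds, the readings for each scale, and the 1-pound cutoffs for "reliable" and "valid". In real testing we never know the true value, which is why validity has to be argued from evidence rather than computed like this.

```python
from statistics import mean, pstdev

TRUE_WEIGHT = 150.0  # hypothetical true weight, in pounds

# Invented readings for three hypothetical scales, one per wheel.
scales = {
    "erratic": [141.0, 158.0, 147.0, 162.0, 139.0],
    "off by 5": [155.1, 154.9, 155.0, 155.2, 154.8],
    "calibrated": [150.1, 149.9, 150.0, 150.2, 149.8],
}


def describe(readings, true_value, max_spread=1.0, max_bias=1.0):
    """Return (spread, bias, reliable, valid) for one scale.

    spread: standard deviation of the readings (consistency)
    bias:   mean reading minus the true value (accuracy)
    The cutoffs are arbitrary, chosen only to make the example concrete.
    """
    spread = pstdev(readings)
    bias = mean(readings) - true_value
    reliable = spread <= max_spread
    # A scale only counts as valid here if it is reliable first.
    valid = reliable and abs(bias) <= max_bias
    return spread, bias, reliable, valid


results = {name: describe(readings, TRUE_WEIGHT) for name, readings in scales.items()}

for name, (spread, bias, reliable, valid) in results.items():
    print(f"{name:>10}: spread={spread:.2f} bias={bias:+.2f} "
          f"reliable={reliable} valid={valid}")
```

Notice that the erratic scale averages out close to the true weight, yet no single reading can be trusted, so it fails on reliability and therefore on validity. The off-by-5 scale is tightly clustered but clustered in the wrong place.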

If reading this has piqued your interest, you’ll be glad to know that defining reliability and validity is only the beginning. In future blog posts I will use examples from the Star assessments to delve into different types of reliability and validity you may have heard or read about.
