August 6, 2015

“Just what do you do with all that data?”

A superintendent asked me this question the other day, and I understand where she’s coming from. The educators in her district have put faith in Renaissance products. She is responsible for the students who complete the assignments, practices, and assessments that populate our databases.

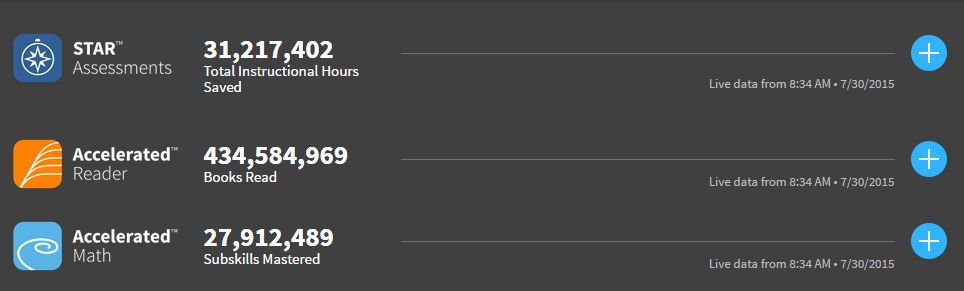

Massive databases. The Data page on our website provides a live count of the data we receive and store from schools across the US, Canada, and the United Kingdom. During the 2014–2015 school year alone, we captured about 70 million achievement tests, feedback on more than 400 million books and articles read, and results from 27 million mastery assignments in mathematics. In August, the data ticker will reset for the 2015–2016 school year, and I’m betting we’ll surpass those marks.

At Renaissance, we encourage teachers to use the data our solutions provide to make instructional decisions. We know that students benefit when their teachers receive timely, accurate information about what kids know and like, as well as about the topics they struggle with or are ready to learn next.

Besides housing and safekeeping this information so educators have access to both current data and historical context, we also work to give this information back to educators in another way.

Every bit of information we maintain is used to share actionable insights with educators, parents, and students. We collect and analyze data with the goal of improving educational outcomes, and we try to draw out everything it can tell us about learning and teaching. What we find goes back to educators, and it also shapes our development decisions.

Just as important, Renaissance is deeply committed to the protection of school and student data. In all we do, we go to great lengths to provide aggregated data that is useful to educators, parents, and researchers while stopping well short of releasing information that could be used to identify any district, school, teacher, or student.

Here is just a sample of what we do with all of this data:

- Learning progressions for reading and math provide a road map of where individual kids have been and the path they need to take next. Using a student’s score, educators can drill down and see which topics a student has mastered, as well as those they still need to work on to become proficient. Differentiating learning experiences in this way is nearly impossible to do manually. Our achievement data supports empirical validation and ongoing fine-tuning of these progressions. With accurate, up-to-date assessment data, every student can be placed at the correct starting point in the learning progressions and move forward from there.

- Fidelity and best practices. We use data to review the extent to which teachers and students use our programs, and we try to make software changes that encourage even better implementation integrity. Why? Because years of research on implementation in both reading and mathematics have shown that when our solutions are used in a certain way, students are more likely to benefit and grow at an accelerated rate.

- What Kids Are Reading. This annual report and searchable website represent the world’s largest survey of K–12 student reading behavior. Drawn from data on millions of students, this information about popular books read by grade, gender, and book characteristics can help students search for engaging books to read. This data also informs the personalized Discovery Bookshelf inside Renaissance Accelerated Reader®.

- Renaissance Star Assessments® are profoundly shaped by student data that informs item calibration, score norms, and links from Star to state summative and other assessments. In addition, psychometricians continually evaluate Star results for indicators of technical adequacy to confirm that the assessments consistently measure what they are intended to measure. Likewise, student growth percentiles (SGPs), which are reported in Star, require a large amount of historical data to understand what growth looks like by subject, grade, and type of student. SGPs help educators answer key questions such as “How is my student growing relative to academic peers?” and “How likely is a particular student to catch up to a level of proficiency on the state test?”

And that only scratches the surface of what we do. Our first priority remains to encourage and assist educators in using their data to make sound instructional decisions and effect change in their classrooms. Beyond that, we strive to practice what we preach. Just as we ask teachers to use their data, we endeavor to use the data we gather to offer as much insight as possible into issues affecting teaching and learning and to further the development of our solutions.

We know the potential that lies in this data, so we continually examine it for other topics to research and share. Is there a research question you’d like to use data to explore? How do you use data to inform instructional decisions? Share your ideas with us below in the comments.