March 12, 2015

What does it really mean to be customer-centric? Lots of companies claim to be customer-focused because it is an easy thing to say. Renaissance is grounded in our mission—to accelerate learning for all. We care about the teachers we serve. We work hard because we want to help students learn. But does that alone make us customer-centric?

In our business—creating great educational software—success hinges on the user experience. When using our tools, teachers need an experience that delivers value, is easy to use, is designed well enough to engage, and is engaging enough to motivate continued use.

Being customer-centric means looking at every touch point as scientifically as possible to create experiences that work. This constant testing for value is called “validation.” If you aren’t validating, you really aren’t customer-centric. Caring isn’t enough. Hard work isn’t enough. To deliver value, you have to always be validating.

Over time, we’ve ramped up both the depth and breadth of the validation we do. How does this help you, the teacher?

Out of nearly 1,000 employees at Renaissance, roughly 80 create new code and content, carrying out our product development roadmap. Validation helps ensure that these resources are dedicated exclusively to work that has high value for you. Last summer, we conducted a range of surveys and focus groups on a dozen different applications we might build. This scientific exploration of buyer attitudes helped us prioritize where to focus our energy: on building what teachers want and need, instead of wasting your (and our) time.

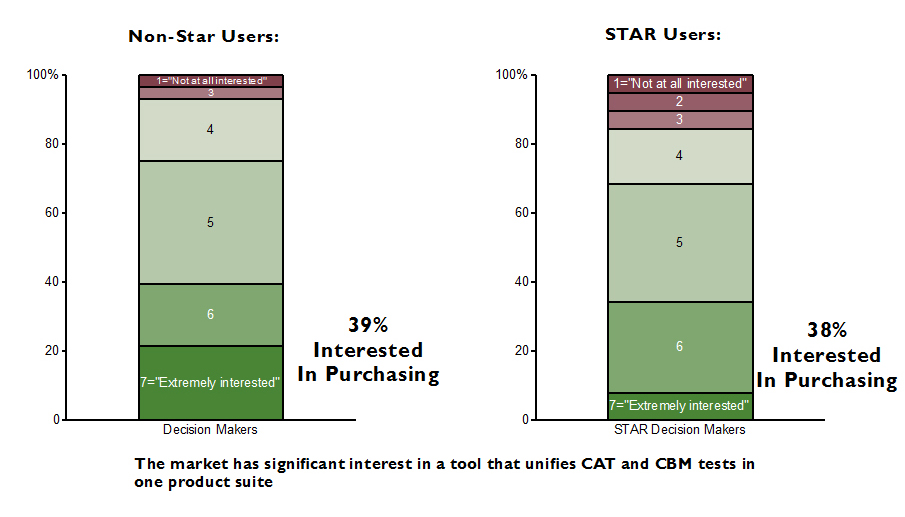

In some recent validation studies, depicted in the graph below, we found that about 40 percent of assessment decision makers are interested in unifying CAT (computer-adaptive testing) and CBM (curriculum-based measurement). This discovery will help shape our roadmap investments.

Of course, validation has its greatest influence on design and development. Over the life of the work toward releasing a new feature (called an “epic”), up to 10 validations occur. Validation typically starts at the storyboard level, to identify key features. Usability testing then shapes development, identifying teacher preferences for everything from color to layout.

The overall goal of validation is to ensure that—with whatever we ship—we have exceeded the threshold of “minimum viable product” (MVP), and that we are solving an important problem for the teacher.

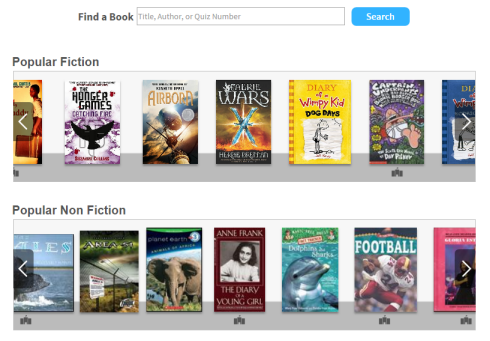

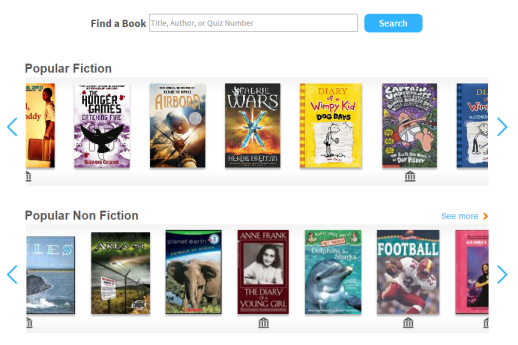

Here’s a simple example of how validation works. Just below is a screen showing the cover flow for Accelerated Reader (AR) Student Book Discovery, as originally conceived:

As the team in Minneapolis worked this epic, their design and validation owners noticed that students weren’t always spotting key elements of the navigation. So, we moved the scroll arrows outside the book covers, lightened the shelf cover, and rendered the Library icon more prominently, as you can see in the screen below:

In ways large and small, validation creates a direct pipeline between our users and our products, enabling their needs and preferences to control our work.

One of the questions I’m asked most often is how we can still get complaints and calls from users after so much validation. When you actually sit in focus groups and one-on-one usability reviews, the answer becomes clear. Whenever we sit down with users, we get a lesson in “one size does not fit all.” People have very different tastes, navigation styles, computer literacy levels, and learning approaches. No amount of testing creates a user experience (UX) that works for everyone. (You know this better than we do, because every day you look out at a roomful of unique learners in each of your classes!)

Here’s a great example: even the basic idea of book discovery in AR isn’t a hit with every student. While 75 percent very much liked the idea of “Top Books For You,” about 15 percent didn’t want it, and the remaining 10 percent or so were indifferent. When you have millions of users, as Renaissance does, even features that are incredibly valuable to most will be distasteful, unusable, or extraneous for some. The test of “goodness” is not whether everyone likes a new capability, but whether, in aggregate, it meaningfully advances the overall value of the offering.

And, that brings us to validation after the software ships. There is a nasty lesson I’ve learned over thirty years, accounting for a reasonable percentage of my grey hair. No version 1 software is perfect. There is a reason Google is famous for hanging “Beta” on everything. There is a reason no one remembers Windows v1.0. There is a reason so-called “early adopters” amount to just two percent of all software buyers. There is a reason 70 percent of Silicon Valley entrepreneurs achieve an exit value of essentially zero for their start-ups. Bootstrapping innovation is incredibly hard, shipping new software is difficult, and even getting a small new feature right can be a challenge.

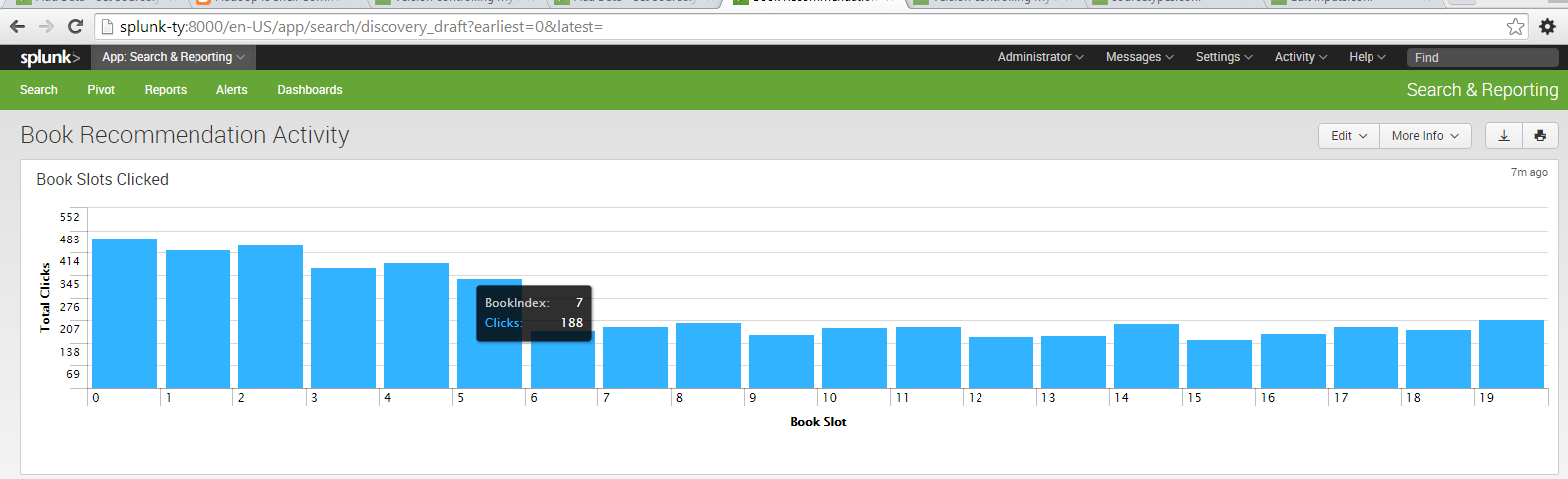

So, how do we identify and deal with the inevitable mistakes and less-than-perfect code? The answer is even more validation. But after shipping, new kinds of “checking” become possible. Once users are actually using the product, we can use “clickstream” analysis to see what they are doing and where they are going wrong. At Renaissance, we’ve had to make a huge investment to get into the clickstream game. While folks at Facebook run 1,000 “A/B tests” a day, until recently we’ve had very little real-time data about how users are actually interacting with Renaissance applications.

Now data is flowing. R&D teams can use a tool called Splunk to explore millions of application instrumentation events, gaining new insight into how our users interact with our features.

Above, you see an hour in the clickstream life of AR Book Discovery, showing which “slot” in the shelf users are clicking on.
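For readers curious about what “exploring instrumentation events” involves, the core idea behind a slot chart like the one above can be sketched in a few lines of Python. This is a minimal illustration, not our actual pipeline or a Splunk query. The event names (`shelf_click`, `page_view`) and the `slot` field are hypothetical stand-ins for whatever the real instrumentation records.

```python
from collections import Counter
from typing import Iterable, Mapping

# Hypothetical event shape (an assumption, not the real schema): each event is a
# dict with an "event" name and, for shelf clicks, the 1-based "slot" clicked.
def clicks_per_slot(events: Iterable[Mapping[str, object]]) -> Counter:
    """Count Book Discovery shelf clicks by slot position, ignoring other events."""
    counts: Counter = Counter()
    for e in events:
        if e.get("event") == "shelf_click":
            counts[e["slot"]] += 1
    return counts

# A tiny made-up sample standing in for an hour of clickstream data.
sample = [
    {"event": "shelf_click", "slot": 1},
    {"event": "shelf_click", "slot": 1},
    {"event": "shelf_click", "slot": 3},
    {"event": "page_view"},
]

for slot, n in sorted(clicks_per_slot(sample).items()):
    print(f"slot {slot}: {n}")
```

At real scale, the same group-and-count step runs over millions of events. A lopsided distribution (for example, almost no clicks past the first few slots) is the kind of signal that prompts a design change.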

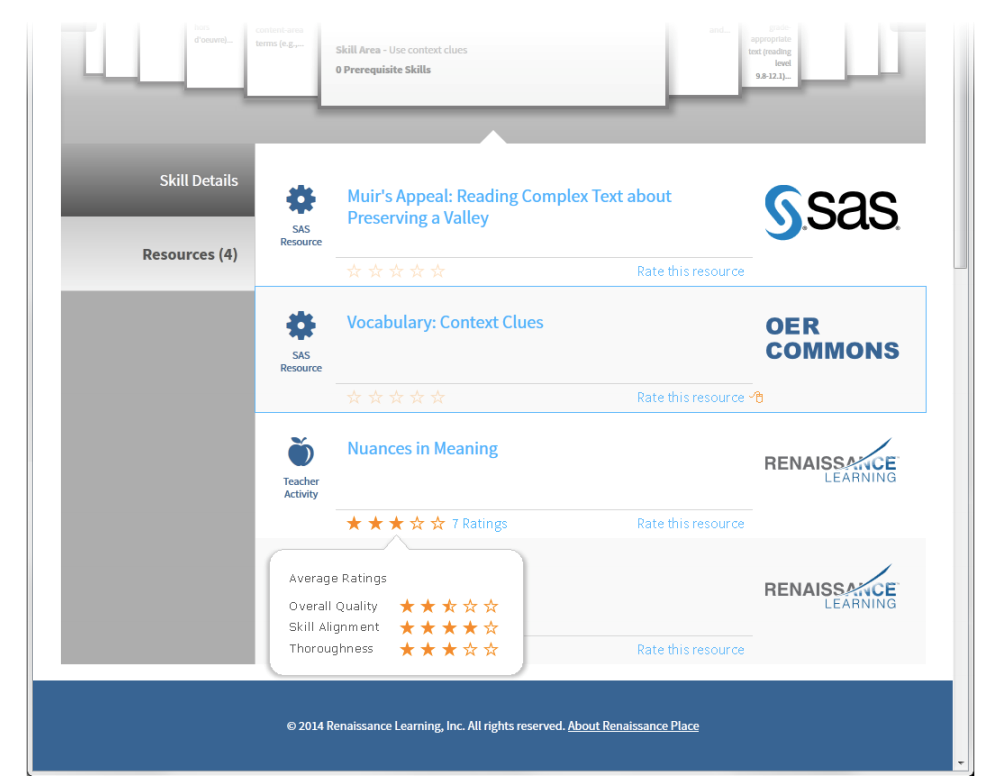

Can you call yourself customer-centric without knowing what users think of the content you are showing them? Probably not, so we are about to introduce validation by reputation. The power of reputation has revolutionized product experiences. Whether it comes to knowing the reputations of drivers on Uber, movies on Netflix, or instructional resources on TeachersPayTeachers, validating goodness via the wisdom of the crowd has enormous power. The screen below is an example of how we’ll capture user ratings to track the reputation of content available in our platform.

By using clickstream data, post-release surveys, reputation, and more, we can pinpoint problems with newly shipped code and content, driving constant improvement more efficiently.

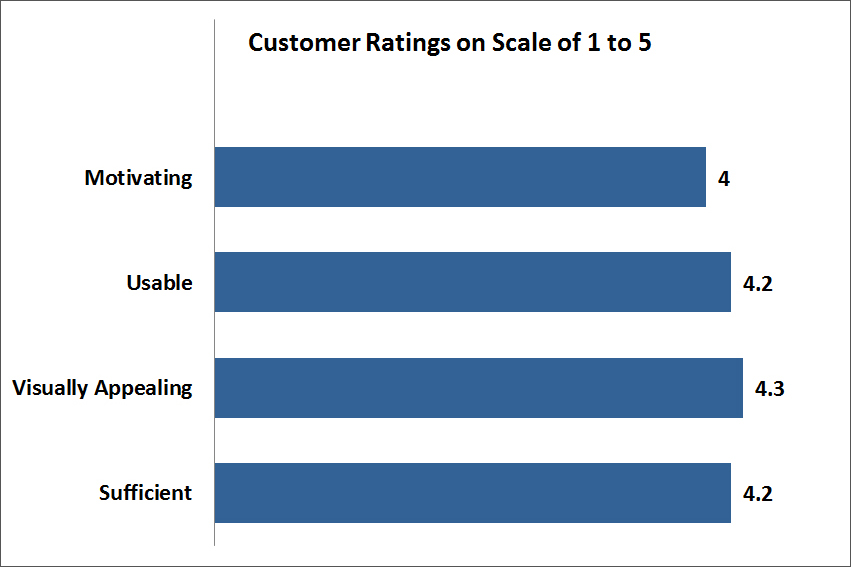

Success hinges on the quality of the user experiences (UX) we deliver. In last fall’s customer survey, for the first time, we asked about the quality of our UX. We’re pleased to report the results were pretty good.

We can and we will get even better. We’re new at validation, but we’re learning fast. Over time, we’ll grow our ability to focus on the right work, reach MVP before we ship, and fix our errors quickly after release. Validation is destined to be our teachers’ (your) best friend.